New COSMOS system manager to push forward innovation

Dr Juha Jäykkä

On 16th January the CTC will be joined by Dr Juha Jäykkä, who will take over from Andrey Kaliazin as System Manager of the COSMOS Supercomputer, part of the STFC DiRAC HPC Facility.

Juha has a PhD in theoretical physics from the University of Turku, Finland, and has worked as a postdoctoral researcher in topological solitons. In research support roles he has managed the local part of an HPC cluster acquisition in a multi-university procurement process, and has acted as an HPC cluster administrator and as a support scientist for Cray supercomputer users, where his responsibilities included code optimisation and porting.

Professor Paul Shellard, CTC Director, said: "The COSMOS consortium eagerly welcomes Dr Juha Jäykkä with his unique background in mathematical physics, HPC applications support and operations management; we are confident his excellent skill set will push forward our world-leading developmental work in both shared-memory and MIC accelerator programming paradigms."

Supercomputing 2013: COSMOS Xeon Phi results

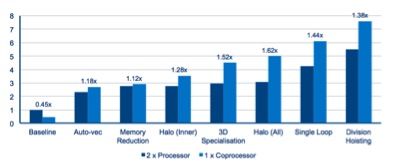

We are pleased to report new progress with MIC (Many Integrated Core) programming, presented in Denver at Supercomputing 2013. The COSMOS team, in collaboration with Intel, has so far ported two consortium codes to the Xeon Phi: Walls and CAMB.

The Walls code simulates the evolution of domain walls in the early Universe. It is a grid-based stencil code that evolves a scalar field through time and calculates the area of any domain walls that form. The code was already parallelised with pure OpenMP and has run on several generations of COSMOS.

The code was run on a 480^3 problem, and the performance comparisons are between 2 x Intel® Xeon® E5-4650L (Sandy Bridge) processors and 1 x Intel® Xeon Phi 5110P coprocessor. Out of the box, the Walls code was ~2x faster on two Xeon sockets than on the Xeon Phi. Fairly straightforward modifications to the algorithm then reduced the memory footprint, increased vectorisation and made the code more cache friendly. After approximately 3-4 weeks of programmer time, the Walls code ran 5.51x faster on the 2 Xeons and 17x faster on the Xeon Phi, leaving the Xeon Phi version 1.38x faster than the 2 Sandy Bridge Xeons. The code is now being ported to use multiple MICs with a view to running the biggest ever domain wall simulation, accelerated by Xeon Phi.

These results were reported in a 15-minute talk at Supercomputing 2013 by Jim Jeffers (Intel) and are the outcome of an ongoing collaboration between James Briggs (COSMOS) and John Pennycook (Intel).
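To illustrate what a grid-based stencil code of the kind described above does, here is a minimal NumPy sketch. It is not the Walls code: the double-well potential, the simple kick-drift time step, the periodic boundaries and all function names are assumptions made for illustration, and the expansion of the Universe is ignored. The wall area is estimated crudely by counting grid links across which the field changes sign.

```python
import numpy as np


def laplacian(phi, dx):
    """7-point stencil Laplacian on a 3D grid with periodic boundaries."""
    return (
        np.roll(phi, 1, 0) + np.roll(phi, -1, 0)
        + np.roll(phi, 1, 1) + np.roll(phi, -1, 1)
        + np.roll(phi, 1, 2) + np.roll(phi, -1, 2)
        - 6.0 * phi
    ) / dx**2


def step(phi, pi, dt, dx, lam=1.0):
    """One kick-drift update of phi'' = lap(phi) - lam * phi * (phi^2 - 1).

    The potential (minima at phi = +1 and phi = -1) is an assumed toy model.
    """
    force = laplacian(phi, dx) - lam * phi * (phi**2 - 1.0)
    pi = pi + dt * force   # kick: update the field velocity
    phi = phi + dt * pi    # drift: update the field itself
    return phi, pi


def wall_area(phi, dx):
    """Crude wall-area estimate: each sign-changing link contributes dx^2."""
    links = 0
    for axis in range(3):
        links += np.count_nonzero(phi * np.roll(phi, -1, axis) < 0.0)
    return links * dx**2


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, dx, dt = 16, 1.0, 0.1
    phi = rng.uniform(-0.1, 0.1, size=(n, n, n))  # small random initial field
    pi = np.zeros_like(phi)
    for _ in range(10):
        phi, pi = step(phi, pi, dt, dx)
    print("estimated wall area:", wall_area(phi, dx))
```

Every grid point is updated from its six nearest neighbours only, which is why memory footprint, vectorisation and cache behaviour dominate the performance of this kind of code.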

Speed-up of Walls on 2x Xeon and 1x Xeon Phi with various optimisations. The speed-up is measured against the original runtime of the unoptimised code on 2x Xeon.
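The quoted speed-up figures can be cross-checked with a little arithmetic. The sketch below assumes that the 5.51x and 17x figures are each measured against the unoptimised code on the same platform, which is our reading of the text rather than something it states explicitly; the function and variable names are illustrative only.

```python
def walls_speedup_check(xeon_speedup=5.51, phi_self_speedup=17.0,
                        phi_vs_xeon_final=1.38):
    """Relate the three quoted Walls speed-up figures to each other.

    Assumption: xeon_speedup and phi_self_speedup are each relative to the
    unoptimised code on the same hardware.
    """
    # Optimised Xeon Phi relative to the original 2x Xeon runtime.
    phi_vs_xeon_baseline = xeon_speedup * phi_vs_xeon_final
    # Implied out-of-the-box advantage of 2x Xeon over the Xeon Phi.
    implied_initial_gap = phi_self_speedup / phi_vs_xeon_baseline
    return phi_vs_xeon_baseline, implied_initial_gap


if __name__ == "__main__":
    baseline, gap = walls_speedup_check()
    print(f"Xeon Phi vs original 2x Xeon runtime: {baseline:.2f}x")
    print(f"implied initial 2x Xeon advantage:    {gap:.2f}x")
```

Under that assumption the optimised Xeon Phi run is about 7.6x faster than the original 2x Xeon run, and the implied initial gap of about 2.2x agrees with the "~2x" out-of-the-box figure.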

CAMB is the key cosmological parameter estimation code used by the Planck satellite consortium. Several instances of this code can now be run on a single Xeon Phi card. The multi-Xeon Phi COSMOS system has allowed Planck analysis to be farmed out to the Phis, increasing analysis throughput, especially for high-accuracy cases, while decreasing the load on the rest of the system. Extensive parameter estimation surveys for new models have been undertaken using Planck likelihood data.

Shared-memory programming advances

The major COSMOS programming innovation on the SMP front has been the recent creation of a dense, symmetric, real eigensolver for the UV2000, in collaboration with Cheng Liao from SGI. The solver is composed of routines from state-of-the-art tiled linear algebra libraries, which are parallelised via dynamic task-based scheduling. This makes them much more efficient and scalable on modern multi-core NUMA systems than the current LAPACK implementations. Once constructed, the eigensolver was tuned for memory and thread placement to achieve the best efficiency on COSMOS.

The new eigensolver is currently used to great effect in the ExoMol project, whose complicated SMP pipeline runs on COSMOS. Recently, a 130,000^2 matrix was diagonalised using 14 nodes on COSMOS in 4.5 hours; the equivalent calculation using ScaLAPACK took 7 hours. The aim is now to test the 15 TB shared-memory limits of COSMOS by analysing 650,000^2 matrices, opening up new frontiers for ExoMol and other science. John Cheng (SGI) has led developments here, with collaborative support from James Briggs.
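To give a sense of the scale involved, the sketch below estimates the memory needed to hold such dense matrices and solves a small dense, symmetric, real eigenproblem with NumPy's LAPACK-backed `numpy.linalg.eigh`. It is not the COSMOS solver: double-precision (8-byte) storage is an assumption, and the helper names are illustrative only.

```python
import numpy as np


def dense_matrix_bytes(n, itemsize=8):
    """Bytes needed to store a full n x n matrix (8-byte doubles assumed)."""
    return n * n * itemsize


def symmetric_eigh_demo(n=200, seed=0):
    """Diagonalise a random real symmetric matrix and return the residual."""
    rng = np.random.default_rng(seed)
    a = rng.standard_normal((n, n))
    a = 0.5 * (a + a.T)                       # symmetrise
    w, v = np.linalg.eigh(a)                  # eigenvalues in ascending order
    residual = np.linalg.norm(a @ v - v * w)  # || A V - V diag(w) ||
    return w, v, residual


if __name__ == "__main__":
    for n in (130_000, 650_000):
        print(f"{n:>7,} x {n:<7,}: {dense_matrix_bytes(n) / 1e12:.3f} TB")
    _, _, res = symmetric_eigh_demo()
    print("residual of the small demo problem:", res)
```

Under the double-precision assumption, a 130,000^2 matrix occupies about 135 GB and a 650,000^2 matrix about 3.4 TB. The eigenvectors require a second array of the same size, plus solver workspace, which is why the larger problem is a genuine test of a 15 TB shared-memory system.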

James Briggs, COSMOS programmer, joined the team in April 2013 with an MPhys in Computational Physics from Edinburgh and experience in astrophysics research.
